Penetration Testing in the Age of Large Language Models:

Large language models are reshaping cybersecurity, offering advanced tools for penetration testers while posing new risks if exploited by attackers. Can AI bolster defenses without empowering adversaries? As both asset and threat, LLMs challenge us to rethink how we approach security in the digital age. But as businesses rush to harness this power, they must pause to ask a critical question: Is your LLM implementation secure? This question lies at the heart of Orasec’s mission—ensuring your AI-powered tools are secure, effective, and resilient against cyber threats.

Meet “Alex,” Your AI Assistant

Imagine Alex, an AI assistant integrated into your company, designed to manage support tickets and effortlessly answer customer queries. Alex is the dream employee—knowledgeable, responsive, and diligent. But there’s a twist: Alex has administrative-level access to your internal systems. This raises questions like: Can Alex be manipulated? Could an attacker leverage Alex to access confidential data, exploit hidden vulnerabilities, or perform unintended actions?

This potential makes penetration testing a must-have for any organization adopting LLMs. Orasec specializes in identifying security risks within LLMs, ensuring safe deployment and robust defense against emerging threats. Here’s a look at the key areas we focus on

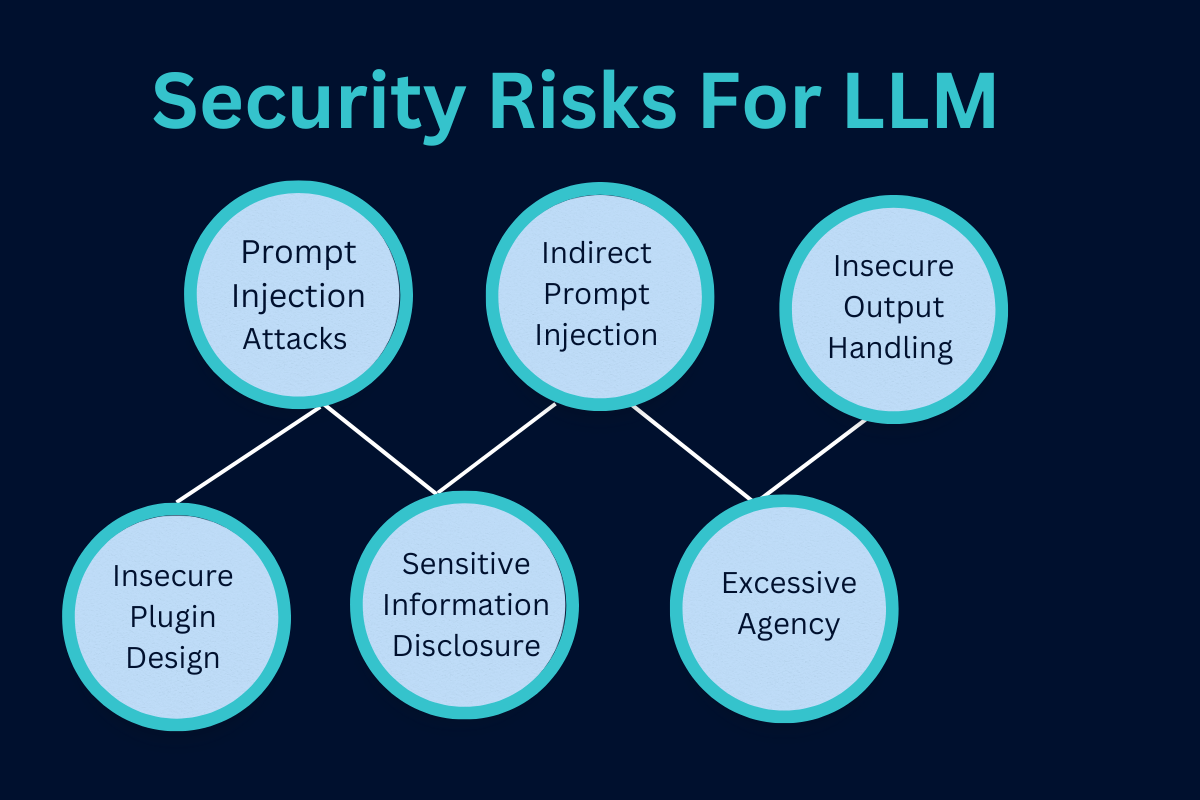

The Top Security Risks for LLMs

Prompt Injection Attacks

Attackers can manipulate an LLM to perform unintended actions, a tactic known as prompt injection or “jailbreaking.” By cleverly crafting prompts, attackers may trick Alex into retrieving confidential information or performing unauthorized tasks. Prompt injection is a growing concern for LLMs as hackers learn to exploit context boundaries in models like Alex.

Indirect Prompt Injection

In this variant, attackers embed malicious prompts in sources Alex will later read. For example, imagine an attacker embedding harmful instructions in a customer review. When Alex analyzes this review, it may inadvertently execute the malicious command, creating a security breach without the user’s knowledge.

Insecure Output Handling

LLMs often interact with other systems and may lack adequate filtering of their responses. Without secure output handling, they can introduce vulnerabilities like cross-site scripting (XSS) or cross-site request forgery (CSRF) attacks, exposing your systems and users to potential risks.

Insecure Plugin Design

Plugins expand an LLM’s abilities but can create new vulnerabilities if not adequately secured. Poorly designed plugins are susceptible to prompt injection attacks, allowing attackers to exploit additional data or access unauthorized functionalities. Orasec’s security team analyzes plugin structures to prevent such breaches.

Sensitive Information Disclosure

If not carefully managed, LLMs can inadvertently disclose sensitive information, as seen in cases like the infamous Samsung data leak. By setting strict data-sharing guidelines, Orasec helps companies secure their models against accidental disclosures.

Excessive Agency

Granting high permissions to LLMs enables enhanced automation, but it also opens the door for unintended actions. By evaluating each LLM’s required permissions, Orasec helps minimize potential misuse, ensuring that “Alex” can only act within predefined boundaries.

Emerging Threats and Risks

- Automated Social Engineering: LLMs can draft highly convincing phishing emails or social engineering scripts, personalizing messages to target specific individuals with astonishing accuracy.

- Weaponized Code Generation: LLMs can generate potentially harmful code, including scripts for common exploits, with just a few prompts. While there are safeguards, there are workarounds that some attackers exploit.

- Adaptive Malware Development: By analyzing existing patterns, LLMs can help attackers create more sophisticated malware or refine existing types. This capability makes malware detection harder as the attacks continuously evolve.

Why Large Language Models Are a Game-Changer

LLMs are trained on vast amounts of data, making them capable of generating realistic language patterns, identifying vulnerabilities, and even simulating attacks. This power is both a blessing and a curse in penetration testing. On one hand, these models can aid ethical hackers in creating custom scripts or understanding complex vulnerabilities. On the other hand, attackers can leverage these same tools to uncover exploits faster than ever.

This technological duality presents a fresh set of challenges. How do we stay one step ahead of bad actors using the same tools to refine their attacks? At Orasec, we believe that staying agile and updating our methodologies is key to countering these evolving threats.

In conclusion, the intersection of large language models and penetration testing represents a crucial frontier for cybersecurity in today’s digital age. By understanding the unique vulnerabilities introduced by LLMs and embedding robust testing methodologies into their security policies, organizations can safeguard their invaluable assets and maintain customer trust in an era where data breaches can be both pervasive and costly. Embracing proactive security measures is not merely a necessity—it’s a vital component of sustainable business success.

The Future of Penetration Testing

As cybersecurity professionals, we need to think differently. Is it time for a new type of “AI-informed” penetration testing? Should our tests not only target systems but also anticipate AI-driven threats? These are the questions Orasec is exploring as we aim to stay at the forefront of cybersecurity innovation.

In this age of AI-driven possibilities, vigilance is essential. By adapting and rethinking our strategies, we can continue to protect and secure against even the most advanced threats. After all, in cybersecurity, evolution is the only constant. Are you ready? https://orasec.co/